Introduction

Welcome back to our blog series on Scikit-Learn Pipelines!

After exploring the fundamentals of pipelines in part 1

and discussing the necessity of robust preprocessing steps for various data types in part 2,

today we're diving deep into a tool that elevates data preprocessing in Scikit-Learn to a new level: the

In the previous article, we saw the importance of applying different preprocessing steps to different columns in our dataset. We sometimes resorted to manually selecting columns and creating separate pipelines for numerical and categorical features. While this works, it can quickly become cluttered and error-prone, especially as our dataset grows and becomes more complex. This is precisely where the ColumnTransformer comes in to offer us an elegant and robust solution.

The ColumnTransformer: A Conductor for Your Data

The

-

The main advantages of the

- Organize and Improve Readability: It centralizes all preprocessing logic, making our code much cleaner and easier to understand.

- Enhance Robustness: It enables us to apply specific transformations to precisely the columns they are intended for, thereby avoiding potential errors caused by accidentally applying a transformation to the wrong data type.

- Improve Maintainability: Changes to the preprocessing of specific columns can be made in isolation without affecting the rest of the pipeline.

Now, let's take a look at a concrete example to see the power of the

Important Note

The code shown is intentionally kept simple to illustrate the functionality of the

Application Example: Comprehensive Data Preprocessing with ColumnTransformer

Consider the following code, which demonstrates how we can use the ColumnTransformer to apply various preprocessing steps to a sample dataset:

import pandas as pd

from sklearn.compose import ColumnTransformer

from sklearn.impute import SimpleImputer

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import (

FunctionTransformer,

MinMaxScaler,

OneHotEncoder,

)

from preprocessing import PostalCodeBinTransformer, remove_special_chars

# Path to the raw data

DATA_PATH = "data/data.xlsx"

# Read data and convert postal_code to integer

raw_data = pd.read_excel(DATA_PATH, converters={"postal_code": int})

# Define numerical columns

COLS_TO_ENCODE = ["age", "experience_in_years", "salary_in_dollar"]

# Pipeline for numerical data: imputation and scaling

numerical_pipeline = Pipeline(

[("imputer", SimpleImputer(strategy="mean")), ("scaler", MinMaxScaler())]

)

# Transformer for special characters in job titles

special_char_remover = FunctionTransformer(

remove_special_chars,

validate=False,

feature_names_out="one-to-one",

).set_output(transform="pandas")

# ColumnTransformer for all preprocessing steps

preprocessor = ColumnTransformer(

transformers=[

("numerical", numerical_pipeline, COLS_TO_ENCODE),

("job_title", special_char_remover, ["job_title"]),

("postal_code", PostalCodeBinTransformer(), ["postal_code"]),

("city", "passthrough", ["city"]),

],

remainder="drop", # Remove other columns (e.g., first_name)

verbose_feature_names_out=False,

)

# One-Hot Encoding for categorical features

ohe_transformer = ColumnTransformer(

transformers=[

(

"ohe",

OneHotEncoder(handle_unknown="ignore", sparse_output=False),

["job_title", "city", "postal_code_bin"],

)

],

remainder="passthrough", # Keep numerical data

verbose_feature_names_out=False,

).set_output(transform="pandas")

preprocessed_data = preprocessor.fit_transform(raw_data)

preprocessed_df = pd.DataFrame(

preprocessed_data, columns=preprocessor.get_feature_names_out()

)

cleaned_data = ohe_transformer.fit_transform(preprocessed_df)

# Display results

pd.set_option("display.max_columns", 8)

pd.set_option("display.expand_frame_repr", False)

print(cleaned_data.head())

Let's break down this code step by step:

1. Importing Libraries:

import pandas as pd

from sklearn.compose import ColumnTransformer

from sklearn.impute import SimpleImputer

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import (

FunctionTransformer,

MinMaxScaler,

OneHotEncoder,

)

from preprocessing import PostalCodeBinTransformer, remove_special_chars

We import necessary libraries from

2. Defining Data Path and Reading Data:

# Path to the raw data

DATA_PATH = "data/data.xlsx"

# Read data and convert postal_code to integer

raw_data = pd.read_excel(DATA_PATH, converters={"postal_code": int})

We define the path to our raw data file (

3. Defining Numerical Columns:

# Define numerical columns

COLS_TO_ENCODE = ["age", "experience_in_years", "salary_in_dollar"]

We create a list of column names that we consider numerical features.

4. Creating a Pipeline for Numerical Data:

# Pipeline for numerical data: imputation and scaling

numerical_pipeline = Pipeline(

[("imputer", SimpleImputer(strategy="mean")), ("scaler", MinMaxScaler())]

)

Here, we define a Scikit-Learn

-

SimpleImputer(strategy="mean") : This step will replace any missing values in the numerical columns with the mean of that column. -

MinMaxScaler() : This step will scale the numerical features to a range between 0 and 1.

5. Creating a Transformer for Special Characters in Job Titles:

# Transformer for special characters in job titles

special_char_remover = FunctionTransformer(

remove_special_chars,

validate=False,

feature_names_out="one-to-one",

).set_output(transform="pandas")

We use

- remove_special_chars: This function takes a pandas Series as input and removes any special characters based on a defined regular expression.

-

validate=False We set this toFalse as we are confident in the function's input. -

feature_names_out="one-to-one" This ensures that the output column name is the same as the input column name. -

.set_output(transform="pandas") This ensures that the output of the transformer is a pandas DataFrame.

Explanation of preprocessing.py:

import pandas as pd

from sklearn.base import BaseEstimator, TransformerMixin

def remove_special_chars(series: pd.Series) -> pd.Series:

"""

Remove special characters from a pandas Series.

This function removes special characters from a given pandas Series using

a predefined regular expression pattern. The targeted special characters

include symbols such as `*`, `/`, `!`, `_`, `-`, `.`, `,`, `;`, `?`, `$`,

`%`, `&`, `^`, and others.

Parameters

----------

series : pd.Series

A pandas Series containing string data from which special characters

will be removed.

Returns

-------

pd.Series

A pandas Series with all special characters removed.

"""

pattern = "[*/!_\\-.,;?$%&$^\xc2\xb0]"

cleaned_series = series.replace(pattern, "", regex=True)

return cleaned_series

class PostalCodeBinTransformer(BaseEstimator, TransformerMixin):

"""

A custom transformer that bins postal codes into predefined ranges.

This transformer takes a DataFrame containing a postal code column and

assigns each postal code to a predefined range (bin). The ranges are

intervals of 10,000, and each bin is labeled accordingly.

Attributes

----------

bins : list of int

A list defining the upper limits of each bin. These bins represent

ranges such as 0-10000, 10000-20000, etc.

labels : list of str

A list of labels corresponding to the ranges defined by `bins`. Each

label represents a range in string format, e.g., "0-10000".

Methods

-------

fit(X, y=None)

Fit the transformer (no operation needed).

transform(X)

Transform the input DataFrame by binning postal codes.

"""

def __init__(self):

"""

Initialize the PostalCodeBinTransformer with predefined bins and labels.

The transformer divides postal codes into intervals of 10,000 and assigns

a label to each interval. For example, postal codes in the range 0-10000

are labeled as "0-10000".

"""

self.bins = [

0,

10000,

20000,

30000,

40000,

50000,

60000,

70000,

80000,

90000,

100000,

]

self.labels = [

"0-10000",

"10000-20000",

"20000-30000",

"30000-40000",

"40000-50000",

"50000-60000",

"60000-70000",

"70000-80000",

"80000-90000",

"90000-100000",

]

def fit(self, X: pd.DataFrame, y=None):

"""

Fit the transformer to the data (no operation needed).

This method is provided for compatibility with the scikit-learn

transformer interface. No actual fitting is required for this transformer.

Parameters

----------

X : pd.DataFrame

The input DataFrame.

y : None

Ignored. Exists for compatibility with scikit-learn.

Returns

-------

PostalCodeBinTransformer

The transformer instance itself.

"""

return self

def transform(self, X: pd.DataFrame) -> pd.DataFrame:

"""

Transform the input DataFrame by binning postal codes.

The postal codes in the 'postal_code' column of the input DataFrame are

assigned to predefined bins based on their values. A new column

'postal_code_bin' is added to the DataFrame, containing the corresponding

bin labels.

Parameters

----------

X : pd.DataFrame

The input DataFrame containing a 'postal_code' column to be binned.

Returns

-------

pd.DataFrame

The transformed DataFrame with an additional 'postal_code_bin' column

representing the binned postal code ranges.

"""

postal_codes = X["postal_code"].astype(int)

X["postal_code_bin"] = pd.cut(

postal_codes, bins=self.bins, labels=self.labels

)

return X.drop(columns=["postal_code"])

def get_feature_names_out(self, input_features=None):

"""

Get the names of the output features.

This method is part of the scikit-learn interface and returns the names

of the features generated by the transformer.

Parameters

----------

input_features : None

Ignored. Exists for compatibility with scikit-learn.

Returns

-------

list of str

A list containing the name of the output feature,

i.e., ['postal_code_bin'].

"""

return ["postal_code_bin"]

-

remove_special_chars(series) : This function uses a regular expression to remove a predefined set of special characters from each string in the input pandas Series. -

PostalCodeBinTransformer : This is a custom transformer that inherits fromBaseEstimator andTransformerMixin -

__init__(self) : Initializes the transformer with predefined bins (upper limits of postal code ranges) and labels for these bins. -

fit(self, X, y=None) : This method is required by the Scikit-Learn API but doesn't perform any operation in this transformer. It simply returns the transformer object itself. -

transform(self, X) : This method takes a DataFrameX as input, extracts thepostal_code column, converts it to integer type, and then usespd.cut to bin the postal codes into the predefined ranges. It adds a new columnpostal_code_bin to the DataFrame containing the bin labels and then drops the originalpostal_code column. -

get_feature_names_out(self, input_features=None) : This method is used to define the names of the output features generated by this transformer. In this case, it outputs a single column namedpostal_code_bin .

6. Creating the First ColumnTransformer for Initial Preprocessing:

# ColumnTransformer for all preprocessing steps

preprocessor = ColumnTransformer(

transformers=[

("numerical", numerical_pipeline, COLS_TO_ENCODE),

("job_title", special_char_remover, ["job_title"]),

("postal_code", PostalCodeBinTransformer(), ["postal_code"]),

("city", "passthrough", ["city"]),

],

remainder="drop", # Remove other columns (e.g., first_name)

verbose_feature_names_out=False,

)

Here, we instantiate the

-

("numerical", numerical_pipeline, COLS_TO_ENCODE) : Applies thenumerical_pipeline (imputation and scaling) to the columns listed inCOLS_TO_ENCODE . The string"numerical" is the name given to this transformer. -

("job_title", special_char_remover, ["job_title"]) : Applies thespecial_char_remover (ourFunctionTransformer ) to thejob_title column. -

("postal_code", PostalCodeBinTransformer(), ["postal_code"]) : Applies our customPostalCodeBinTransformer to thepostal_code column. -

("city", "passthrough", ["city"]) : Applies the"passthrough" strategy to thecity column , meaning this column will be kept without any transformation. -

remainder="drop" : This specifies what to do with any columns in the input DataFrame that are not explicitly mentioned in thetransformers list. In this case, we are dropping them (e.g., a potentialfirst_name column ). -

verbose_feature_names_out=False : This option controls whether the output feature names should be verbose (including the transformer name). We set it toFalse for cleaner output.

7. Creating the Second ColumnTransformer for One-Hot Encoding:

# One-Hot Encoding for categorical features

ohe_transformer = ColumnTransformer(

transformers=[

(

"ohe",

OneHotEncoder(handle_unknown="ignore", sparse_output=False),

["job_title", "city", "postal_code_bin"],

)

],

remainder="passthrough", # Keep numerical data

verbose_feature_names_out=False,

).set_output(transform="pandas")

We create another

-

("ohe", OneHotEncoder(handle_unknown="ignore", sparse_output=False), ["job_title", "city", "postal_code_bin"]) : Applies theOneHotEncoder to thejob_title ,city , andpostal_code_bin columns. -

handle_unknown="ignore" This tells the encoder to ignore unknown categories encountered during thetransform phase. -

sparse_output=False This ensures that the output of the One-Hot Encoder is a dense NumPy array rather than a sparse matrix. -

remainder="passthrough" This time, we set remainder to"passthrough" , meaning that any columns not specified in thetransformers list (which will be our processed numerical columns from the firstColumnTransformer ) will be passed through without any transformation. -

.set_output(transform="pandas") : This ensures the output is a pandas DataFrame.

8. Fitting and Transforming the Data:

preprocessed_data = preprocessor.fit_transform(raw_data)

preprocessed_df = pd.DataFrame(

preprocessed_data, columns=preprocessor.get_feature_names_out()

)

cleaned_data = ohe_transformer.fit_transform(preprocessed_df)

First, we fit the

Next, we fit the

9. Displaying Results:

# Display results

pd.set_option("display.max_columns", 8)

pd.set_option("display.expand_frame_repr", False)

print(cleaned_data.head())

Finally, we set some pandas display options to make the output more readable and print the head of the

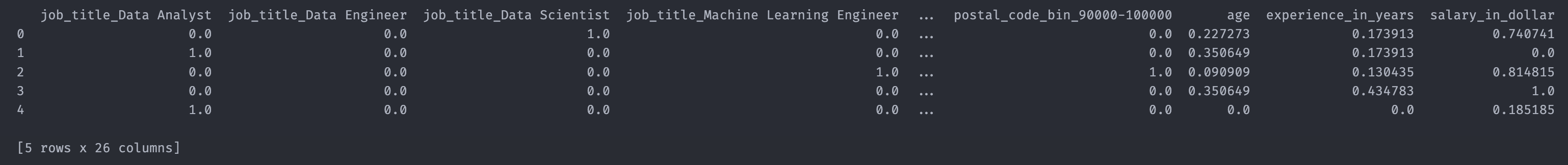

The final result stored in the `cleaned_data` dataframe

The final result stored in the `cleaned_data` dataframe

Elegance and Robustness

As you can see, the

The use of Pipelines within the

Conclusion

The